A couple of weeks ago I decided to take a look into one of our service logs, searching for endpoints that could be improved. In order to narrow down my search, I looked straight to the ones that could have the most impact. To evaluate that I used the following metric: response meantime (similar to average) * a number of requests that are provided by AppSignal.

After applying the calculation to all the requests I decided to optimize an endpoint whose purpose was to retrieve a list of restaurants ordered from the closest to the farthest from the position the user is. The endpoint was executing the following SQL query:

SELECT id, ST_Distance_Sphere(ST_MakePoint(112.15769, 25.28552), r0."location") AS distance

FROM "restaurant" AS r0

WHERE ST_Distance_Sphere(ST_MakePoint(112.15769, 25.28552), r0.location) < 400

ORDER BY distance

LIMIT 20 OFFSET 0;

When I wrote this query I didn’t think much about it. I knew that I wanted the distance displayed and filtered and I could do that using the ST_Distance_Sphere function. While the database had a small number of restaurants, the query was quite fast, but as soon as more restaurants were added, the endpoint response slowdown was quite noticeable.

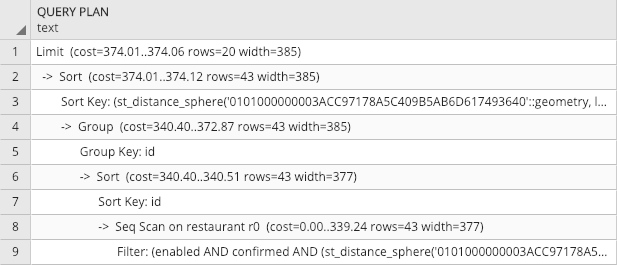

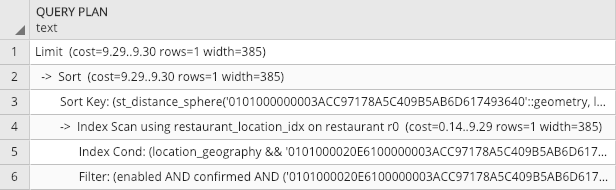

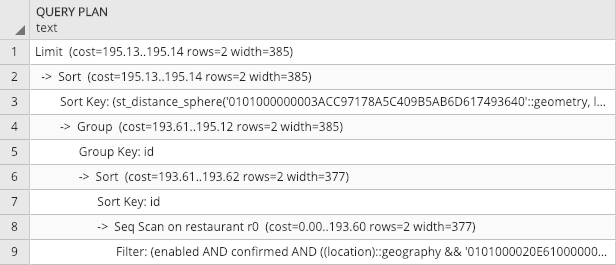

In order to have a good reference point on the performance cost, I used the EXPLAIN keyword behind the query. EXPLAIN not only gives the query cost, but also a detailed view where the query is taking more time, if it is using an index, etc. The query cost for this query was 374.

Query Plan using EXPLAIN on the query displayed above

Query Plan using EXPLAIN on the query displayed above

I knew one way to optimize a search query was to create a new index, but as I didn’t have much experience with PostGIS I decided to find the proper way to optimize this query.

The first thing I noticed after a couple of searches was a post from 2013 in the PostGIS page that suggested to replace ST_Distance in the WHERE clause with ST_DWithin. But because the radius distance was passed as a parameter in meters (instead of an SRID distance when used geometry), I needed to convert the two points, the user location, and the restaurant location, to geography points instead of geometry, which I was using by default. That can easily be done by appending ::geography to geometry object or just replace ST_MakePoint with ST_GeographyFromText(´POINT(112.15769 25.28552)’).

If you are looking for the difference between geometry and geography types, please visit this link.

This is how the query looked like with after the change:

SELECT id, ST_Distance_Sphere(ST_MakePoint(112.15769, 25.28552), r0."location") AS distance

FROM "restaurant" AS r0

WHERE ST_DWithin(ST_GeographyFromText('POINT(112.15769 25.28552)'), r0.location, 400)

ORDER BY distance

LIMIT 20 OFFSET 0;

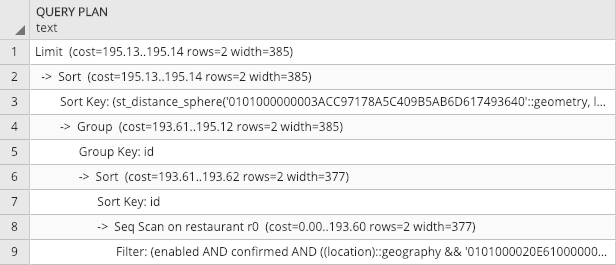

Running this query with this small modification, made the cost change from 374 to 195, a 48% improvement.

Query Plan using EXPLAIN with the SQL query that contains STDWithin.

Query Plan using EXPLAIN with the SQL query that contains STDWithin.

Because I wanted to order them by distance, I kept the ST_Distance_Sphere in both SELECT and ORDER BY. It will only run these calculations on a maximum of 20 rows (because I limited it to 20) or the number of restaurants inside our radius.

I knew that I could also create an index to take full advantage of ST_DWithin as explained here. As recommended, the index created should be of type GIST (Generalized Search Tree).

CREATE INDEX restaurant_location_idx ON restaurant USING GIST(location);

But running the same query with the new index created didn’t improve its cost. Looking into the EXPLAIN report I noticed that it was casting r0.location with ::geometry internally, bypassing the index and not using it to improve the query cost.

I then dropped the index, created a new column named location_geography, migrated the geometry data inside the location column to the geography column and created the index again.

ALTER TABLE restaurant ADD COLUMN location_geography geography(Point,4326);

UPDATE restaurant r SET location_geography = r.”location”::geography;

CREATE INDEX restaurant_location_idx ON restaurant USING GIST(location_geography);

I also took the opportunity to run VACUUM ANALYZE as it is a good practice when adding a new index.

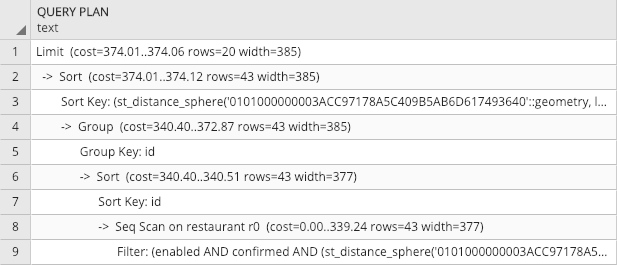

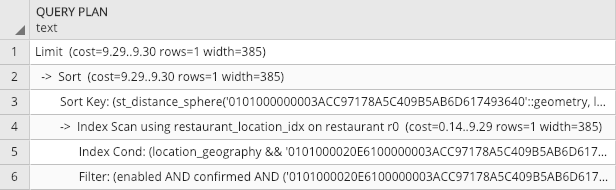

By replacing the location by location_geography I was able to reduce the query cost to an impressive 9! That was a whopping 97% improvement compared to our initial query cost.

Query Plan using EXPLAIN on a query with index

Query Plan using EXPLAIN on a query with index

PS — A good document to look up for examples of improvements is this pdf file presented on 2009 O’Reilly Opens Source Convention. Another good source of information is the Geographic Information Systems Stack Exchange.